On March 6, Alibaba released and open-sourced its new reasoning model, QwQ-32B, which has 32 billion parameters. Although it is far smaller than DeepSeek-R1, which has 671 billion parameters (37 billion of them active), QwQ-32B matches DeepSeek-R1's performance on a range of benchmarks. QwQ-32B performed especially well on math and coding tests, outperforming OpenAI's o1-mini and the distilled versions of DeepSeek-R1. It also scored higher than DeepSeek-R1 on some evaluations, such as LiveBench and IFEval. The model relies on reinforcement learning and integrates agent capabilities that support critical thinking and adaptive reasoning. Notably, QwQ-32B needs much less computing power, so it can be deployed on consumer-grade hardware. The release fits Alibaba's AI strategy, which includes heavy investment in cloud and AI infrastructure. After the release, Alibaba's US-listed shares rose 8.61% to $141.03, and its Hong Kong shares gained more than 7%.[Jiemian, in Chinese]

Related Articles

2025-06-27 05:15

2118 views

Preorder the new Anker Soundcore Sleep A30 earbuds with ANC for $159

UPDATE: Jun. 16, 2025, 12:07 p.m. EDT This post has been updated with new photos, plus more informat

Read More

2025-06-27 04:31

1774 views

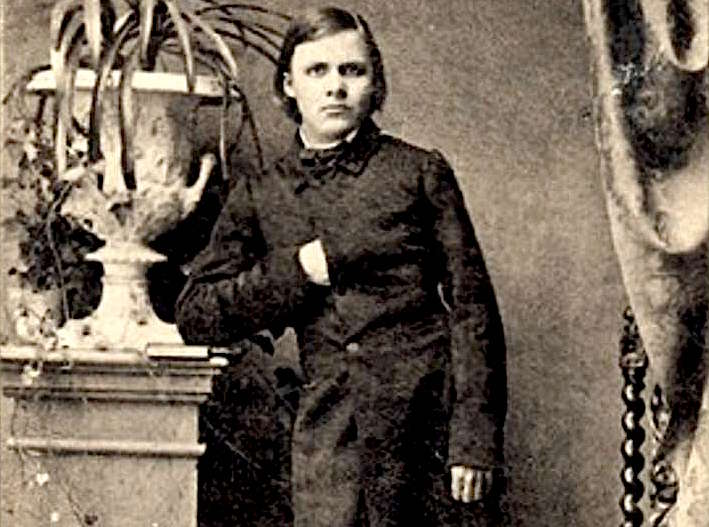

Back to School with Nietzsche

Back to School with the ÜbermenschBy Damion SearlsSeptember 16, 2015On TranslationNietzsche on educa

Read More

2025-06-27 04:15

360 views

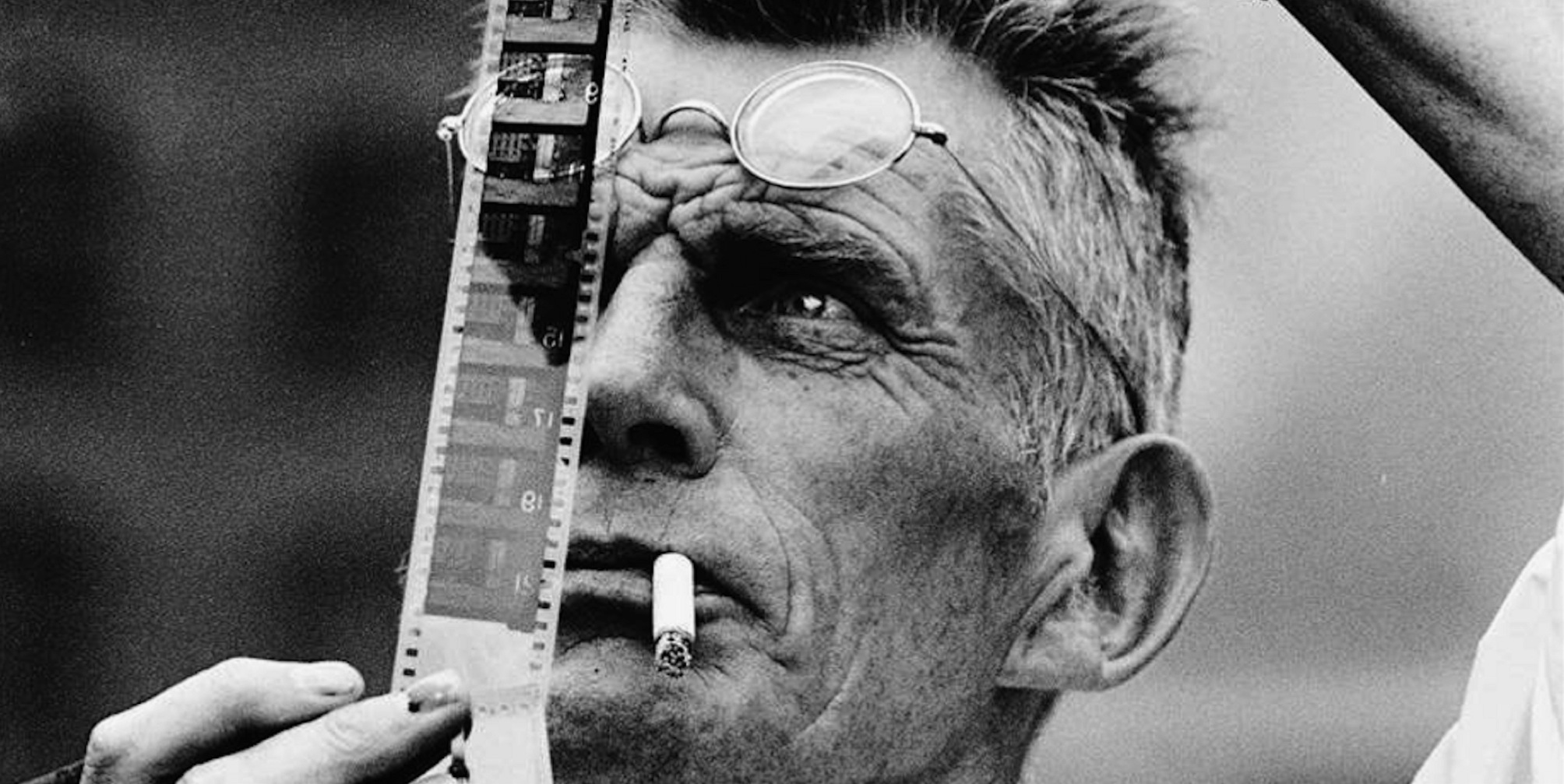

When Samuel Beckett Teamed Up with Buster Keaton

That Time When Beckett Made a Movie, and Other NewsBy Dan PiepenbringSeptember 18, 2015On the ShelfB

Read More